
-

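Measuring the attitude of workpieces and grasping them are important process steps on automated production lines. In research on attitude-transformation experimental platforms, HUANG et al. [1] used the Euler angles of an object's attitude to describe the spatial motion of a robot. JIANG et al. [2] studied the mathematical model of the pitching-heaving motion of a dolphin's caudal fin and established kinematic equations relating the motion parameters of the tail. CHEN et al. [3] proposed a circular structured-light vision model for attitude measurement, which measures target attitude with monocular vision and circular laser structured light. YU et al. [4] used the central-axis method to measure the pitch and yaw angles of an axisymmetric rocket target in space and analyzed the errors; target image information was used to improve measurement accuracy, and the central axis was extracted indirectly, which avoided the multi-camera target-matching problem. LI et al. [5] calibrated a camera system and used a single camera for image-based displacement measurement of all six degrees of freedom of a six-degree-of-freedom (6-DOF) mechanism; simulated displacements of the six degrees of freedom were taken as ideal data and compared with the measured data to obtain the measurement error.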

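Around the 1980s, researchers abroad posed the perspective-n-point (PNP) problem in attitude measurement. It is an attitude-solving method based on a single image frame: given the coordinate relationship between any two of the n feature points on the measured target, the coordinates of these n feature points in the camera coordinate system can be determined from the camera imaging model; the calibrated intrinsic and extrinsic camera parameters are then used to obtain the feature-point coordinates in the world coordinate system, and the attitude parameters of the target are finally solved. In 1981, FISCHLER and BOLLES established a mathematical model and proposed a closed-form solution, providing a theoretical basis for visual attitude measurement [6]. In 2009, LEPETIT et al. proposed a non-iterative algorithm applicable to every PNP problem with N≥4; it expresses all feature points in terms of four virtual control points, which reduces the PNP problem to estimating the coordinates of these four control points in the camera coordinate system [7]. STEWÉNIUS et al. [8] proposed a five-point relative-pose algorithm, which outperforms the direct method in most cases.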

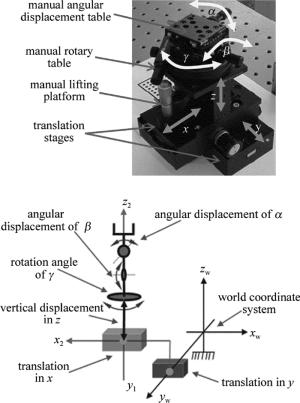

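To transform the attitude of a workpiece and measure the corresponding attitude parameters under different transformation conditions, a 6-DOF attitude-transformation experimental platform was designed. The platform provides two basic experimental functions: attitude transformation of the workpiece, realized by combining the three translational and three rotational degrees of freedom of the platform; and a vision measurement system, realized by a monocular camera combined with laser structured light.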

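The platform consists of an x-direction translation stage, a y-direction translation stage and a z-direction lifting stage, which form the position-adjustment unit, and a γ-direction horizontal rotation stage, a β-direction pitch stage and an α-direction roll stage, which form the orientation-adjustment unit. Combined motion of the sliding pairs and revolute pairs produces the different workpiece attitudes. Based on an analysis of the Denavit-Hartenberg (D-H) model, a kinematic model of the platform was built, and the D-H parameters and the attitude transfer matrix were obtained; in addition, intrinsic and extrinsic parameter models of the vision system were built from the pinhole imaging principle, which provides the basis for visual measurement of workpiece attitude on the platform [9-10]. The ring-shaped laser structured-light image is segmented to obtain the ring light stripe; the normal vector of the stripe image, combined with the transformations between coordinate systems, gives the normal vector of the workpiece surface in the world coordinate system, from which the workpiece attitude is computed.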

-

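The 6-DOF attitude simulation platform is shown in Figure 1. The position-adjustment system consists of the x-direction translation stage, the y-direction translation stage and the z-direction lifting stage; the orientation-adjustment system consists of the γ-direction 360° rotation stage, the β-direction tilt stage and the α-direction tilt stage.

By combining three linear motions and three rotary motions, the platform can adjust both position and attitude, and its end working plane can reproduce any position and any attitude within a limited region of space. Combined with the vision system, the platform supports visual recognition and visual measurement of workpieces in different attitudes.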

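Figure 2 shows the functional components for the six degrees of freedom of the platform together with their coordinate systems. The main technical parameters are: rotation about the x axis α=±15°, resolution 0.1°; rotation about the y axis β=±15°, resolution 0.1°; rotation about the z axis γ=360°, resolution 0.1°. The linear travel along the x axis is 75 mm, resolution 0.01 mm; along the y axis 75 mm, resolution 0.02 mm; along the z axis 13 mm, resolution 0.01 mm.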
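The ranges and resolutions above can be captured as a small configuration table. The following is a minimal sketch, assuming that linear travel is measured from 0 and that γ runs from 0° to 360°; the names `AXES` and `snap_to_resolution` are hypothetical and not part of the platform's software.

```python
# (lower limit, upper limit, resolution) per axis, from the stated parameters.
# Assumption: linear travel starts at 0 and gamma runs from 0 to 360 degrees.
AXES = {
    "alpha": (-15.0, 15.0, 0.1),   # degrees, rotation about x
    "beta": (-15.0, 15.0, 0.1),    # degrees, rotation about y
    "gamma": (0.0, 360.0, 0.1),    # degrees, rotation about z
    "x": (0.0, 75.0, 0.01),        # mm
    "y": (0.0, 75.0, 0.02),        # mm
    "z": (0.0, 13.0, 0.01),        # mm
}


def snap_to_resolution(axis, value):
    """Reject out-of-range commands and round the rest to the axis resolution."""
    lower, upper, step = AXES[axis]
    if not lower <= value <= upper:
        raise ValueError(f"{axis}={value} is outside [{lower}, {upper}]")
    steps = round((value - lower) / step)
    return round(lower + steps * step, 6)


print(snap_to_resolution("alpha", 3.14))
print(snap_to_resolution("y", 10.031))
```

Snapping a command to the resolution before comparing it with a measured angle keeps the "given value" consistent with what the stage can actually realize.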

-

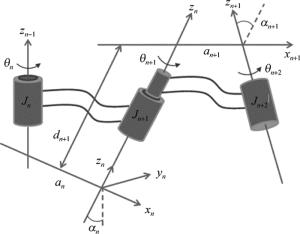

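The D-H method can be used to model any robot composed of joints and links, and most robots are essentially a set of joints and links. When all joint variables are known, the forward kinematic model determines the pose of the robot end; conversely, when a specific end position and attitude are required, inverse kinematics is used to solve for each joint variable.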

-

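The 6-DOF simulation platform can be abstracted as a link-joint robot structure, so the D-H method can be applied to model it. Normally each joint of a robot has one degree of freedom and is either sliding or rotating. Figure 3 shows a typical link-joint robot model in D-H notation. In the figure, three consecutive joints, $J_n$, $J_{n+1}$ and $J_{n+2}$, are connected by two links, $n$ and $n+1$. For the three revolute joints, the z axis and the direction of joint rotation obey the right-hand screw rule, and the rotation angle $\theta$ is the joint variable; for a sliding joint, the link displacement along the z axis is the joint variable [11-12]. The x axis of a joint is generally defined along the common perpendicular of two adjacent joint axes. In the figure, the joint offset between $J_n$ and $J_{n+1}$ is $a_n$, and the joint offset between $J_{n+1}$ and $J_{n+2}$ is $a_{n+1}$; the distance between two adjacent common perpendiculars is $d$; the angle between the z axes of two adjacent joints is defined as the joint twist angle, $\alpha_n$ and $\alpha_{n+1}$ respectively.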

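To transform between joints, a reference coordinate frame is attached to each joint. The transformation from joint $J_{n+1}$ to joint $J_{n+2}$ proceeds as follows [13-14]: (1) a rotation by $\theta_{n+1}$ about the $z_n$ axis, operator $\mathrm{rot}(z_n, \theta_{n+1})$, makes the $x_n$ axis parallel to the $x_{n+1}$ axis, so that the two axes lie in the same plane; a translation by $d_{n+1}$, operator $\mathrm{trans}(0, 0, d_{n+1})$, then makes them collinear; (2) a translation by $a_{n+1}$ along the $x_n$ axis, written $\mathrm{trans}(a_{n+1}, 0, 0)$, brings the origins of the $x_n$ and $x_{n+1}$ axes together; (3) a rotation by $\alpha_{n+1}$ turns the $z_n$ axis about the $x_{n+1}$ axis until it coincides with the $z_{n+1}$ axis, written $\mathrm{rot}(x, \alpha_{n+1})$. At this point the frame of joint $J_{n+1}$ coincides with the frame of joint $J_{n+2}$, which completes the transformation between the two frames.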

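The transformation matrix from joint $J_{n+1}$ to joint $J_{n+2}$ is written ${}^{n}T_{n+1}$; the corresponding matrix $A_{n+1}$ is obtained by right-multiplying the matrices of the individual steps above:

$${}^{n}T_{n+1} = A_{n+1} = \mathrm{rot}(z, \theta_{n+1})\,\mathrm{trans}(0, 0, d_{n+1})\,\mathrm{trans}(a_{n+1}, 0, 0)\,\mathrm{rot}(x, \alpha_{n+1}) \quad (1)$$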
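The right-multiplication of the four elementary operators can be sketched in a few lines of plain Python. This is an illustrative sketch, not the authors' implementation; the function names are hypothetical, angles are in radians, and the operator order is the one listed in the transformation steps.

```python
import math


def matmul(p, q):
    """Product of two 4x4 homogeneous matrices stored as nested lists."""
    return [[sum(p[i][k] * q[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def rot_z(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0, 0.0], [s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]


def rot_x(alpha):
    c, s = math.cos(alpha), math.sin(alpha)
    return [[1.0, 0.0, 0.0, 0.0], [0.0, c, -s, 0.0],
            [0.0, s, c, 0.0], [0.0, 0.0, 0.0, 1.0]]


def trans(x, y, z):
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def dh_matrix(theta, d, a, alpha):
    """A = rot(z, theta) * trans(0, 0, d) * trans(a, 0, 0) * rot(x, alpha)."""
    m = rot_z(theta)
    for step in (trans(0.0, 0.0, d), trans(a, 0.0, 0.0), rot_x(alpha)):
        m = matmul(m, step)  # right-multiply, in the order of the steps
    return m


# Example: a joint rotated by 90 degrees with d = 2 and a = 3 (hypothetical values).
for row in dh_matrix(math.pi / 2, 2.0, 3.0, 0.0):
    print([round(v, 6) for v in row])
```

Building the matrix as an explicit product keeps the code aligned with the step-by-step description; a closed-form matrix would be faster but harder to check against the text.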

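For a robot with six joints, the transformation from the robot base R to the end H of the gripper can be expressed as:

$${}^{R}T_{H} = A_1 A_2 A_3 A_4 A_5 A_6 \quad (2)$$

Thus, for a robot with six degrees of freedom, each degree of freedom corresponds to one A matrix.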

-

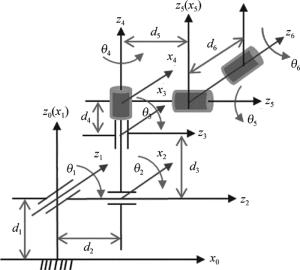

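The coordinate systems of the 6-DOF attitude-transformation platform are shown in Figure 4. The system consists of three sliding pairs and three revolute pairs. The distances between the common perpendiculars of successive kinematic pairs are $d_1, d_2, d_3, d_4, d_5, d_6$. Following the right-hand screw rule, the joint rotation angles $\theta_1$ to $\theta_6$ and the twist angles $\alpha_0$ to $\alpha_5$ between the z axes of adjacent joints are determined.

From these coordinate systems, the D-H parameter table for the transformation from the platform base to its end is obtained, as listed in Table 1.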

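| Link $i$ | Angle $\theta_i$/(°) | Spacing $d_i$ | Link length $a_{i-1}$/mm | Twist angle $\alpha_{i-1}$/(°) |
|---|---|---|---|---|
| 1 | -90 | 0 | 0 | -90 |
| 2 | -90 | $d_2$ | 0 | -90 |
| 3 | 0 | $d_3$ | 0 | -90 |
| 4 | 0 | $d_4$ | 0 | 0 |
| 5 | 90 | $d_5$ | 0 | 90 |
| 6 | 0 | $d_6$ | 0 | 90 |

Table 1. D-H parameter table of attitude simulation experiment platform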
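As a rough illustration, the rows of Table 1 can be chained into a base-to-end transformation. The sketch below makes two assumptions that are not confirmed by the text: each row is fed into the operator order rot(z)·trans(0,0,d)·trans(a,0,0)·rot(x), although the $i-1$ subscripts in the table headers may imply a different convention, and the spacings $d_2$ to $d_6$, which are symbolic in the table, are replaced by hypothetical numbers.

```python
import math


def dh_matrix(theta_deg, d, a, alpha_deg):
    """Closed form of rot(z, theta) trans(0, 0, d) trans(a, 0, 0) rot(x, alpha)."""
    t, al = math.radians(theta_deg), math.radians(alpha_deg)
    ct, st, ca, sa = math.cos(t), math.sin(t), math.cos(al), math.sin(al)
    return [[ct, -st * ca, st * sa, a * ct],
            [st, ct * ca, -ct * sa, a * st],
            [0.0, sa, ca, d],
            [0.0, 0.0, 0.0, 1.0]]


def matmul(p, q):
    return [[sum(p[i][k] * q[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


# Hypothetical placeholder values in mm; Table 1 only gives the symbols d2..d6.
D2, D3, D4, D5, D6 = 40.0, 40.0, 30.0, 30.0, 20.0

# Rows of Table 1: (theta in degrees, d, a in mm, alpha in degrees).
TABLE_1 = [
    (-90.0, 0.0, 0.0, -90.0),
    (-90.0, D2, 0.0, -90.0),
    (0.0, D3, 0.0, -90.0),
    (0.0, D4, 0.0, 0.0),
    (90.0, D5, 0.0, 90.0),
    (0.0, D6, 0.0, 90.0),
]


def base_to_end(rows):
    """Multiply the per-joint matrices A1..A6 from base to end."""
    total = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for row in rows:
        total = matmul(total, dh_matrix(*row))
    return total


T = base_to_end(TABLE_1)
for line in T:
    print([round(v, 4) for v in line])
```

Whatever values are chosen for the spacings, the upper-left 3×3 block of the result must remain a rotation matrix, which is a convenient sanity check on the chain.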

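From the D-H parameter table, the transformation matrices of the individual joints are:

From Eq. (3), the overall transformation matrix from the base R to the end H is: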

2.1. D-H modeling principle of robot joint transformation

2.2. D-H kinematic model of the 6-DOF simulation platform

-

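The vision system of the attitude-transformation platform uses a monocular camera combined with laser structured light, which enables attitude recognition and dimensional measurement of workpieces on the platform. The monocular pinhole imaging model of the camera is shown in Figure 5. In the figure, $x_w y_w z_w$ is the world coordinate system; the optical center $O$ of the camera and the axes $x_c$, $y_c$, $z_c$ form the camera Cartesian coordinate system; the origin $O_0$ on the equivalent imaging plane Γ is the origin of the image coordinate system in pixel units, and $(u, v)$ are the coordinates of any point in this pixel coordinate system; $O_1$ is the origin of the image coordinate system in mm, and its pixel coordinates are $(u_0, v_0)$. $OO_1$ is the focal length $f$ of the camera. Let the scene point $p_1$ have coordinates $(x_c, y_c, z_c)$ in the camera coordinate system, and let its image point $p_2$ on the imaging plane have coordinates $(X, Y, Z)$ [15-16].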

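Using homogeneous coordinates in matrix form, the coordinates of any point on the imaging plane in the $u$-$O_0$-$v$ and $X$-$O_1$-$Y$ coordinate systems are related by:

The coordinates of the scene point $p_1$ and its image point $p_2$ are related by:

Using Eq. (5) to convert the image point $(X, Y)$ on the imaging plane to the pixel point $(u, v)$ and substituting into Eq. (6) gives the intrinsic parameter model of the camera: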

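where ${k_X} = \frac{f}{{{\rm{d}}X}}$ is the magnification factor along the X axis; ${k_Y} = \frac{f}{{{\rm{d}}Y}}$ is the magnification factor along the Y axis; $M_{in}$ is the intrinsic parameter matrix of the camera, which describes the relationship between points on the workpiece surface and image points. The extrinsic parameter model describes the relationship between the coordinates of workpiece-surface points and camera coordinates; the expression of the coordinate system $x_w$-$O_w$-$y_w$-$z_w$ in the coordinate system $x_c$-$O$-$y_c$-$z_c$ constitutes the extrinsic parameter model of the camera: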

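where $(x_w, y_w, z_w)$ are the coordinates of the scene point in the world coordinate system; $M_w$ is the extrinsic parameter matrix of the camera; $\boldsymbol{n} = [n_x\ n_y\ n_z]^{\rm T}$ is the direction vector of the $x_w$ axis in the camera coordinate system $x_c$-$O$-$y_c$-$z_c$; $\boldsymbol{o} = [o_x\ o_y\ o_z]^{\rm T}$ is the direction vector of the $y_w$ axis in the camera coordinate system; $\boldsymbol{a} = [a_x\ a_y\ a_z]^{\rm T}$ is the direction vector of the $z_w$ axis in the camera coordinate system; $\boldsymbol{n}$, $\boldsymbol{o}$ and $\boldsymbol{a}$ together form the rotation matrix; $\boldsymbol{p} = [p_x\ p_y\ p_z]^{\rm T}$ is the translation of the origin of the world coordinate system $x_w$-$O_w$-$y_w$-$z_w$ in the camera coordinate system [17-18].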
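The two parameter models can be illustrated with a short projection routine. This is a sketch under the usual pinhole assumptions (no lens distortion, no skew), using the symbols defined above; the numerical values of $f$, the pixel sizes, $(u_0, v_0)$ and the extrinsic vectors are hypothetical, and the function names are not taken from the paper.

```python
def world_to_camera(point_w, n, o, a, p):
    """Extrinsic model: columns n, o, a rotate the point, p translates it."""
    xw, yw, zw = point_w
    return tuple(n[i] * xw + o[i] * yw + a[i] * zw + p[i] for i in range(3))


def camera_to_pixel(point_c, k_x, k_y, u0, v0):
    """Intrinsic model: perspective division followed by scaling and offset."""
    xc, yc, zc = point_c
    if zc <= 0:
        raise ValueError("the point must lie in front of the camera")
    return (k_x * xc / zc + u0, k_y * yc / zc + v0)


# Hypothetical camera: 8 mm lens, 5 um square pixels, principal point (320, 240).
f, dX, dY = 8.0, 0.005, 0.005
k_x, k_y = f / dX, f / dY
u0, v0 = 320.0, 240.0

# Hypothetical extrinsics: world axes aligned with camera axes, 500 mm away.
n, o, a = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
p = (0.0, 0.0, 500.0)

point_c = world_to_camera((10.0, -5.0, 0.0), n, o, a, p)
print(camera_to_pixel(point_c, k_x, k_y, u0, v0))
```

A point on the optical axis projects to $(u_0, v_0)$ regardless of depth, which is a quick check that the intrinsic offsets are applied correctly.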

-

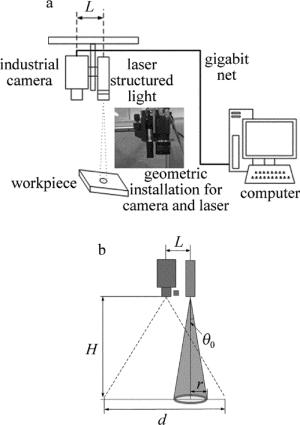

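To measure the attitude of a workpiece on the platform, a structured-light system is built from a circular structured-light laser and a camera, as shown in Figure 6. Figure 6a shows the principle of laser structured-light vision measurement and the geometric mounting of the camera relative to the laser; Figure 6b shows the overall geometric model of the camera and the laser projector. To keep the axis of the laser projector parallel to the optical axis of the camera, a purpose-made mounting plate is used: the camera and the laser are fixed on either side of the plate, which fixes the geometric dimensions between them.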

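In Figure 6, the distance between the camera optical axis and the axis of the laser ring is $L$, the half-angle of emission of the ring laser is $\theta_0$, the object distance of the camera is $H$, and the laser projector casts a ring-shaped structured-light stripe of radius $r$ on the workpiece surface, with:

When setting the relative position of the camera and the laser projector, the laser ring must remain within the field of view, which requires the distance $L$ between the camera optical axis and the laser ring axis to satisfy:

where $S$ is the size of the lens target surface (sensor) and $f$ is the focal length of the lens.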
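The geometric constraint can be explored numerically. The sketch below is not the paper's formula; it assumes a simple geometry in which the laser apex sits at the same distance $H$ from the workpiece as the camera, so that $r = H\tan\theta_0$, and in which the half-width of the field of view at distance $H$ is $HS/(2f)$ by similar triangles. All function names and numbers are hypothetical.

```python
import math


def ring_radius(h, half_angle_deg):
    """Radius of the projected ring, assuming the laser apex is at distance h."""
    return h * math.tan(math.radians(half_angle_deg))


def half_field_width(h, sensor_size, focal_length):
    """Half-width of the camera field of view at distance h (pinhole model)."""
    return h * sensor_size / (2.0 * focal_length)


def ring_in_view(l, h, half_angle_deg, sensor_size, focal_length):
    """True if the far edge of the ring, at l + r from the optical axis, is visible."""
    return l + ring_radius(h, half_angle_deg) <= half_field_width(
        h, sensor_size, focal_length)


# Hypothetical setup: H = 200 mm, theta0 = 5 deg, S = 6.4 mm, f = 8 mm.
print(ring_in_view(30.0, 200.0, 5.0, 6.4, 8.0))
print(ring_in_view(70.0, 200.0, 5.0, 6.4, 8.0))
```

Under these assumptions a larger axis spacing $L$ pushes the ring toward the edge of the image, so the admissible $L$ shrinks as the ring radius grows.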

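The attitude of a workpiece in space is determined by three degrees of freedom: the roll angle, the pitch angle and the yaw angle. Let $\boldsymbol{n}_w = (n_{w,x}, n_{w,y}, n_{w,z})$ be the normal vector of the workpiece surface in space; the attitude parameters of the workpiece, roll angle θ, pitch angle φ and yaw angle ξ, follow from the triangular geometry:

The center coordinates $(x_0', y_0', z_0')$ of the laser ring stripe and the stripe normal vector $\boldsymbol{n}' = (n_x', n_y', n_z')$ are, respectively: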

3.1. Pinhole imaging model

3.2. Ring laser structured-light system

-

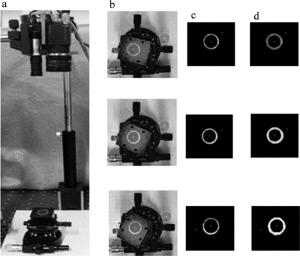

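In the experiment, the workpiece is placed on the attitude-transformation platform and the three attitude-angle parameters of the platform are adjusted for measurement, as shown in Figure 7a. Figure 7b shows the captured images of the workpiece in three different attitudes. Figure 7c shows the laser ring stripe region segmented from the workpiece image by threshold segmentation and morphological dilation. The Hessian matrix is applied to the stripe region to extract the sub-pixel coordinates of the stripe center; these center coordinates, converted to the imaging-plane coordinate system, are then fitted to an ellipse equation by least-squares ellipse fitting, see Figure 7d. Finally, the workpiece attitude is computed from the ellipse-fitting result.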
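The ellipse-fitting step can be illustrated with a plain algebraic least-squares fit. The text does not specify which least-squares formulation is used, so the sketch below is an assumption: it fits $Ax^2 + Bxy + Cy^2 + Dx + Ey = 1$ through the normal equations and recovers the center. This normalization fails for conics passing through the origin, and the sample points are synthetic.

```python
import math


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_conic(points):
    """Least-squares coefficients (A, B, C, D, E) of A x^2 + B xy + C y^2 + D x + E y = 1."""
    rows = [[x * x, x * y, y * y, x, y] for x, y in points]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(5)] for i in range(5)]
    atb = [sum(r[i] for r in rows) for i in range(5)]
    return solve_linear(ata, atb)


def conic_center(coeffs):
    """Center of the conic: the point where both partial derivatives vanish."""
    A, B, C, D, E = coeffs
    det = 4.0 * A * C - B * B
    return ((B * E - 2.0 * C * D) / det, (B * D - 2.0 * A * E) / det)


# Synthetic stripe centers on an ellipse centered at (1, -1) with semi-axes 3 and 2.
samples = [(1.0 + 3.0 * math.cos(2.0 * math.pi * k / 12),
            -1.0 + 2.0 * math.sin(2.0 * math.pi * k / 12)) for k in range(12)]
print(conic_center(fit_conic(samples)))
```

With real sub-pixel stripe centers the points are noisy, and a constrained ellipse-specific fit may be preferable to this unconstrained conic fit.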

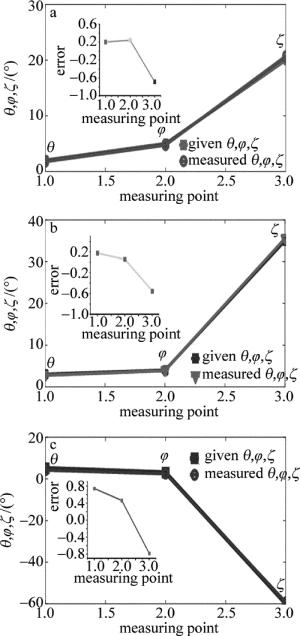

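The measured attitude parameters (θ, φ, ξ) of the workpiece are listed in Table 2. The mean errors between the measured and given attitude angles are 0.373° for the roll angle θ, 0.253° for the pitch angle φ and 0.673° for the yaw angle ξ.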

| No. | Given value (θ, φ, ξ)/(°) | Normal vector | Measured value (θ, φ, ξ)/(°) |
|---|---|---|---|
| 1 | (2.0, 5.0, 20.0) | (0.0831, 0.0314, 0.9965) | (1.80, 4.76, 20.69) |
| 3 | (3.0, 4.0, 35.0) | (0.0687, 0.0491, 0.9964) | (2.82, 3.94, 35.55) |
| 5 | (5.0, 3.0, -60.0) | (-0.0442, 0.0742, 0.9960) | (4.26, 2.54, -59.22) |

Table 2. Measurement results for workpiece attitude
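The rows of Table 2 are numerically consistent with the relations θ = arctan($n_y/n_z$), φ = arctan($n_x/n_z$) and ξ = arctan($n_y/n_x$); for the third row, φ matches in magnitude only. These relations are inferred from the tabulated values and should be read as an assumption rather than as the exact expressions used in the measurement. A minimal sketch that reproduces the table from the normal vectors:

```python
import math


def attitude_from_normal(nx, ny, nz):
    """Roll, pitch and yaw in degrees, using relations inferred from Table 2.

    Assumes nx and nz are non-zero; a perfectly level surface would need
    separate handling.
    """
    roll = math.degrees(math.atan(ny / nz))
    pitch = math.degrees(math.atan(nx / nz))
    yaw = math.degrees(math.atan(ny / nx))
    return roll, pitch, yaw


# Normal vectors and measured angles copied from Table 2.
TABLE_2 = [
    ((0.0831, 0.0314, 0.9965), (1.80, 4.76, 20.69)),
    ((0.0687, 0.0491, 0.9964), (2.82, 3.94, 35.55)),
    ((-0.0442, 0.0742, 0.9960), (4.26, 2.54, -59.22)),
]

for normal, measured in TABLE_2:
    computed = attitude_from_normal(*normal)
    print([round(v, 2) for v in computed], "table:", measured)
```

The normal vectors are tabulated to four decimals, so the recomputed angles can differ from the table in the last digit.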

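Figure 8 shows the attitude angles set on the experimental platform, the measured attitude angles, and the measurement error at each point. The measured attitude agrees closely with the true values, with mean errors below 1° for all three angles, but some error remains; its sources include the bracket error between the camera and the circular structured-light projector and the extraction error of the sub-pixel coordinates of the stripe center.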
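The reported mean errors can be reproduced from the given and measured values in Table 2, assuming that "mean error" denotes the mean absolute difference over the three tabulated rows. The variable and function names below are illustrative.

```python
# Given and measured (theta, phi, xi) in degrees, copied from Table 2.
GIVEN = [(2.0, 5.0, 20.0), (3.0, 4.0, 35.0), (5.0, 3.0, -60.0)]
MEASURED = [(1.80, 4.76, 20.69), (2.82, 3.94, 35.55), (4.26, 2.54, -59.22)]


def mean_abs_error(given, measured):
    """Mean absolute difference per angle (theta, phi, xi)."""
    count = len(given)
    return tuple(
        sum(abs(g[k] - m[k]) for g, m in zip(given, measured)) / count
        for k in range(3)
    )


errors = mean_abs_error(GIVEN, MEASURED)
print([round(e, 3) for e in errors])  # [0.373, 0.253, 0.673]
```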

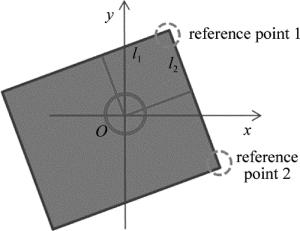

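Figure 9 shows the principle of workpiece displacement measurement. In the figure, $O$ is the geometric center of the laser structured light, and $l_1$ and $l_2$ are the relative displacements from point $O$ to the edges on which reference point 1 lies. If reference point 1 is not on the right side of the y axis, the relative displacement from point $O$ to the edge on which reference point 2 lies is measured instead.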

-

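A 6-DOF attitude-transformation experimental platform was built in a laboratory environment. To establish the mechanism model and the vision-system model of the platform and to achieve visual recognition and measurement of workpieces in varying attitudes, the following main work was completed: based on an analysis of the D-H modeling principle of robot joint transformation, the D-H kinematic model of the 6-DOF simulation platform was built, the D-H parameter table of the platform was derived, and the overall transformation matrix from the platform base to its end was obtained; using the pinhole imaging principle, the vision-system model of the platform was established, which lays the foundation for workpiece attitude measurement on the platform.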

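The mathematical expression of the laser ring stripe image in the imaging plane was derived, giving the normal vector of the workpiece surface in the camera coordinate system; transformation between coordinate systems then gives the normal vector of the workpiece surface in the world coordinate system, from which the roll angle θ, pitch angle φ and yaw angle ξ of the workpiece are computed. The experimental results show that the mean errors of the three measured attitude angles are all below 1°, so the measured attitude agrees closely with the true values.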
