
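An autonomous mobile robot is an intelligent robot that can operate autonomously, continuously, and in real time on roads and in field environments of many kinds. It has broad application prospects in industrial production, exploration of unknown environments, medicine, unmanned aircraft and vehicles, automated operations in hazardous sites, and military and national defense.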

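For an autonomous mobile robot, the most critical problem is navigation: in an unknown environment containing obstacles, the robot must acquire effective and reliable environmental information, detect and recognize obstacles, and move from the start point to the target point without collision according to a given evaluation criterion. Radar navigation currently requires planar or 3-D scanning; on rough field terrain with uneven surfaces, severe jolting of the vehicle body causes serious missed detections and false alarms of obstacles. Deep-space exploration robots, lunar rovers, and similar platforms have no global positioning system (GPS) support at all and cannot navigate by GPS, so visual navigation has been widely adopted[1-2].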

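However, little research addresses how a robot can navigate autonomously in a dark environment when the energy it carries is limited and external light sources cannot be used for illumination. References [3] to [5] use single-camera line-structured-light vision to detect obstacles and, from the change in position of the light stripe in the image, track the motion of moving targets to avoid them, but they do not study robot self-localization or 3-D mapping. This project proposes a navigation method for autonomous mobile robots in dark environments based on grating-projection stereo vision, covering obstacle avoidance, simultaneous localization and mapping, and visual odometry in autonomous navigation.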

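Stereo vision navigation[6-7] uses ambient light (mainly sunlight), so the sensors perceive the surrounding scene directly. Because it acquires and processes information in a way that imitates human vision, and because it effectively saves the limited energy carried by the rover, it is widely used in space exploration rovers, Mars rovers, and lunar rovers.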

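Stereo vision cannot handle navigation of an autonomous mobile robot in a dark environment, and it requires image matching[8-9], which is difficult and of limited accuracy. To address these problems, the authors combine grating projection[10-12] with stereo vision[13] and propose a visual sensor method for autonomous mobile robot navigation in dark environments based on grating-projection stereo vision. The method only needs to project grating stripes at set time intervals while the cameras capture an image, and it can then handle obstacle avoidance, simultaneous localization and mapping (SLAM)[14], and visual odometry[15] for autonomous navigation in the dark.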


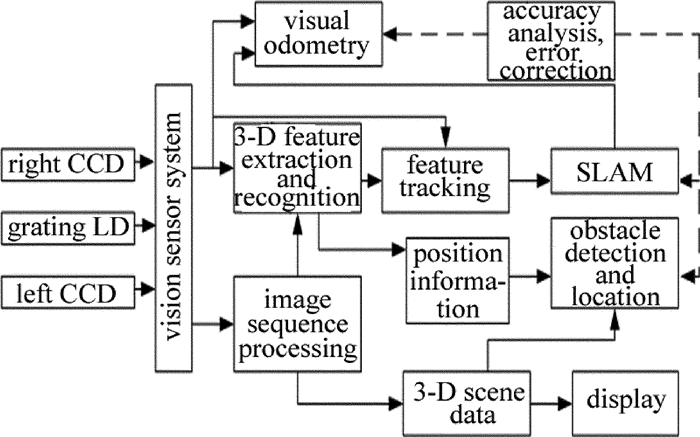

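Figure 1 shows the block diagram of the visual-sensor navigation principle of the autonomous mobile robot. First, grating projection and stereo vision are fused to build the geometric and mathematical models of the grating-projection stereo vision sensor, which gives the sensor model. Next, as the robot moves, the 3-D coordinates of each scene are recovered promptly, and a reliable and realistic method for obstacle detection and analysis is established. Moving targets are then tracked and recognized. Finally, the robot's motion is estimated and its position determined precisely from the relationship between the movement of the tracked targets and the vehicle body's attitude, heading, and travel distance. In the figure, CCD denotes a charge-coupled device and LD denotes a laser diode.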


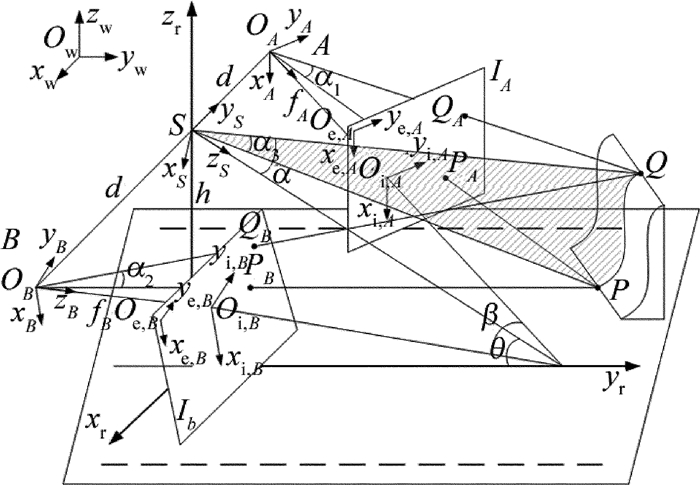

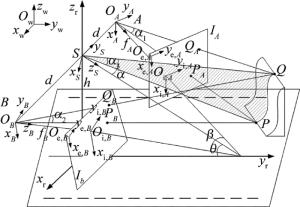

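The grating-projection stereo vision sensor consists of one grating projector (point S in Fig. 2) and two cameras (points A and B in Fig. 2). The model involves the world coordinate system ($O_w$-$x_w y_w z_w$), the robot coordinate system ($O_r$-$x_r y_r z_r$), the camera coordinate systems ($O_A$-$x_A y_A z_A$ and $O_B$-$x_B y_B z_B$), the image coordinate systems ($O_{i,A}X_{i,A}Y_{i,A}$ and $O_{i,B}X_{i,B}Y_{i,B}$), the discrete pixel coordinate systems ($O_{e,A}X_{e,A}Y_{e,A}$ and $O_{e,B}X_{e,B}Y_{e,B}$), and the grating-projection coordinate system ($O_S$-$x_S y_S z_S$). The proposed geometric model of grating-projection stereo vision is shown in Fig. 2.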


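On the basis of the geometric model, the mathematical model of grating-projection stereo vision is established by intersecting three planes: the grating stripe plane $SPQ$ and the camera space planes $O_APQ$ and $O_BPQ$. The equation of the projected stripe plane in Fig. 2 is:

Transforming the stripe plane equation into world coordinates gives:

where $R_{S,w}$ and $T_{S,w}$ are the rotation matrix and translation vector from the grating-projection coordinate system to the world coordinate system; their 12 parameters are obtained by calibration.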
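The change of frame described here can be sketched numerically. The snippet below assumes the stripe plane is written as $n_S \cdot x_S + d_S = 0$ in the projector frame and that points map as $x_w = R_{S,w} x_S + T_{S,w}$; the function name and the example values are illustrative and not taken from the paper.

```python
import numpy as np

def plane_to_world(n_s, d_s, R_sw, T_sw):
    """Map the plane n_s . x_s + d_s = 0 (projector frame) into the world frame.

    Assumes points transform as x_w = R_sw @ x_s + T_sw.
    Returns (n_w, d_w) such that n_w . x_w + d_w = 0.
    """
    n_s = np.asarray(n_s, dtype=float)
    R_sw = np.asarray(R_sw, dtype=float)
    T_sw = np.asarray(T_sw, dtype=float)
    n_w = R_sw @ n_s            # normals rotate like direction vectors
    d_w = d_s - n_w @ T_sw      # offset absorbs the translation
    return n_w, d_w

# Hypothetical calibration: projector rotated 90 degrees about z, shifted by (1, 2, 0).
R = np.array([[0.0, -1.0, 0.0],
              [1.0,  0.0, 0.0],
              [0.0,  0.0, 1.0]])
T = np.array([1.0, 2.0, 0.0])

# Stripe plane x_s = 0 in the projector frame.
n_w, d_w = plane_to_world([1.0, 0.0, 0.0], 0.0, R, T)
```

With these example values the stripe plane becomes $y_w = 2$ in the world frame. The same function applies to any plane whose source frame has a calibrated rotation and translation to the world frame.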

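Let the equation of the camera space plane $O_APQ$ be:

where $A_1, B_1, C_1, D_1$ are coefficients. From the perspective transformation of the camera, the relationship between the discrete pixel coordinate system $O_{e,A}X_{e,A}Y_{e,A}$ and the camera coordinate system $O_A$-$x_A y_A z_A$ can be established:

where $\lambda$ is a scale factor, $f_A$ and $f_B$ are the focal lengths of cameras A and B, $u_0$ and $v_0$ are the coordinates of the image center, and $u_A$ and $v_A$ are the discrete pixel coordinates on the image plane of camera A. These parameters must be obtained by calibration. Substituting the coordinates of the three points $O_A$, $P_A$, and $Q_A$ in the discrete pixel coordinate system into Eq. (2) gives the values of $A_1, B_1, C_1, D_1$. Likewise, the equation of the camera space plane $O_BPQ$ is: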

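Likewise, $A_2, B_2, C_2, D_2$ are coefficients. The relationship between the camera coordinate systems and the world coordinate system is:

where $R_{A,w}$, $R_{B,w}$ and $T_{A,w}$, $T_{B,w}$ are the rotation matrices and translation vectors from the camera coordinate systems to the world coordinate system; their 24 parameters are obtained by calibration.

Combining Eqs. (3), (5), and (6) gives the equations of the two camera space planes in the world coordinate system; combining Eqs. (1) and (2) gives the equation of the grating stripe plane in the world coordinate system. Because the space points P and Q must satisfy both sets of equations, solving them simultaneously yields the equation of the space curve PQ and the coordinates of P and Q in the world coordinate system.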
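A camera space plane such as $O_APQ$ can be built from two stripe pixels once the intrinsic parameters are known. The sketch below assumes an ideal pinhole camera (one focal length in pixel units, no skew, no lens distortion), which may be simpler than the calibrated model used in the experiments. The function names and numeric values are illustrative.

```python
import numpy as np

def pixel_to_ray(u, v, f, u0, v0):
    """Back-project pixel (u, v) to a ray direction in the camera frame.

    Pinhole model assumed: lambda * [u, v, 1]^T = K @ [x, y, z]^T with
    K = [[f, 0, u0], [0, f, v0], [0, 0, 1]] and f in pixel units.
    """
    return np.array([(u - u0) / f, (v - v0) / f, 1.0])

def camera_plane(pix_p, pix_q, f, u0, v0):
    """Plane through the optical center and the rays of two stripe pixels.

    Returns (A, B, C, D) with A*x + B*y + C*z + D = 0 in the camera frame.
    D is 0 because the plane passes through the origin (the optical center).
    """
    ray_p = pixel_to_ray(*pix_p, f, u0, v0)
    ray_q = pixel_to_ray(*pix_q, f, u0, v0)
    normal = np.cross(ray_p, ray_q)       # perpendicular to both rays
    norm = np.linalg.norm(normal)
    if norm < 1e-12:
        raise ValueError("the two pixels give the same ray; plane is undefined")
    normal = normal / norm
    return normal[0], normal[1], normal[2], 0.0

# Hypothetical intrinsics and two pixels on a vertical stripe through the image center.
coeffs = camera_plane((320.0, 100.0), (320.0, 400.0), f=800.0, u0=320.0, v0=240.0)
```

For this example the result is the plane $x_A = 0$, up to the sign of the normal. Expressing it in the world frame then only requires the calibrated rotation and translation of that camera.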
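The final intersection step can be sketched as a small linear-algebra problem. The snippet treats the stripe segment PQ as straight, so that the stripe plane and both camera planes share one line, and it recovers that line in a least-squares sense with an SVD. This is one possible numerical treatment under that assumption, not necessarily the solver used by the authors; the function name and example planes are illustrative.

```python
import numpy as np

def common_line(normals, offsets):
    """Least-squares line shared by planes n_i . x + d_i = 0 (world frame).

    normals: (k, 3) array of plane normals, k >= 2; offsets: (k,) array of d_i.
    Returns (point, direction): the point of the line closest to the origin
    and a unit direction vector (its sign is arbitrary).
    """
    N = np.asarray(normals, dtype=float)
    d = np.asarray(offsets, dtype=float)
    # Normalize each plane so all planes carry equal weight.
    scale = np.linalg.norm(N, axis=1)
    N = N / scale[:, None]
    d = d / scale
    U, S, Vt = np.linalg.svd(N)           # full SVD: Vt is always 3 x 3
    if S[1] < 1e-9:
        raise ValueError("planes are (nearly) parallel; no unique line")
    direction = Vt[2]                     # least-constrained direction
    # Rank-2 pseudo-inverse solution of N @ x = -d.
    coeffs = (U[:, :2].T @ (-d)) / S[:2]
    point = Vt[:2].T @ coeffs
    return point, direction

# Three example planes that all contain the line x = 1, y = 2 (parallel to z).
point, direction = common_line(
    [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [1.0, 1.0, 0.0]],
    [-1.0, -2.0, -3.0],
)
```

Truncating to rank 2 keeps the solution stable when measurement noise makes the three planes only approximately concurrent along one line.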

2.1. Geometric model of grating-projection stereo vision

2.2. Mathematical model of grating-projection stereo vision


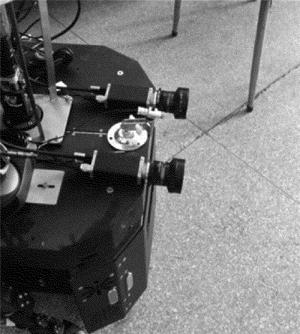

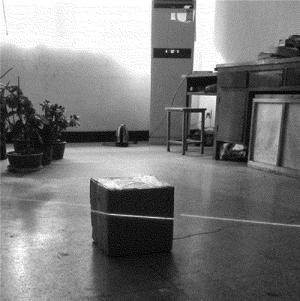

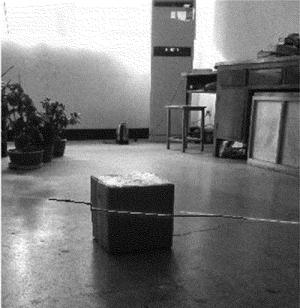

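Sensor layout: two CCD cameras are placed horizontally with an arbitrary angle between them, and the grating projector is placed between the two lenses. Experiments show that placing the two optical axes at an arbitrary angle makes the vision system easy to design and install, enlarges the common field of view of the cameras, and maintains measurement accuracy and robustness when the environment is harsh and the robot vibrates strongly. The vision system is mounted on the upper part of the robot, with the optical axes of the two cameras and the grating projector tilted slightly downward at an angle to the ground, as shown in Fig. 3. While the robot moves, the grating projector projects stripes ahead of the robot at fixed intervals, and the two cameras simultaneously capture the deformed stripe images modulated by the surrounding scene, as shown in Fig. 4.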

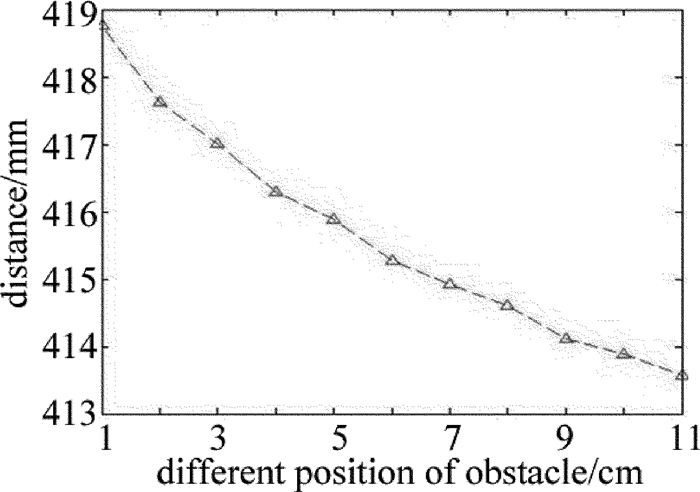

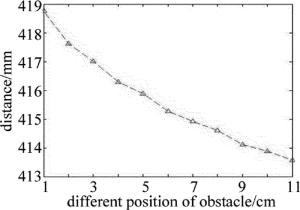

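The structured-light stripes are then extracted from the left and right images separately, which yields the obstacle height map of the indoor scene shown in Fig. 5. The distance between the obstacle and the robot is also computed, and this information is used for robot localization and path planning, as shown in Fig. 6.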
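How reconstructed stripe points might be turned into a height map and an obstacle distance can be sketched as follows. The snippet assumes the 3-D points are already expressed in a robot frame with x forward, y to the left, z up, and the ground at z = 0, all in metres. The grid size, ranges, and height threshold are hypothetical choices, and the code is not the authors' implementation.

```python
import numpy as np

def height_map(points, cell=0.05, x_range=(0.0, 2.0), y_range=(-1.0, 1.0)):
    """Rasterize 3-D points into a grid holding the maximum height per cell.

    Cells with no points, or only points at or below the ground, stay at 0.
    """
    pts = np.asarray(points, dtype=float)
    nx = int(round((x_range[1] - x_range[0]) / cell))
    ny = int(round((y_range[1] - y_range[0]) / cell))
    grid = np.zeros((nx, ny))
    ix = np.floor((pts[:, 0] - x_range[0]) / cell).astype(int)
    iy = np.floor((pts[:, 1] - y_range[0]) / cell).astype(int)
    inside = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)
    # ufunc.at handles several points falling into the same cell.
    np.maximum.at(grid, (ix[inside], iy[inside]), pts[inside, 2])
    return grid

def nearest_obstacle_distance(points, min_height=0.03):
    """Horizontal distance from the robot origin to the closest point above min_height."""
    pts = np.asarray(points, dtype=float)
    tall = pts[pts[:, 2] > min_height]
    if tall.size == 0:
        return None
    return float(np.min(np.hypot(tall[:, 0], tall[:, 1])))

# Hypothetical stripe points: two on an obstacle, one on the floor.
stripe_points = [[0.52, 0.01, 0.10], [0.53, 0.02, 0.20], [1.02, -0.48, 0.0]]
grid = height_map(stripe_points)
distance = nearest_obstacle_distance(stripe_points)
```

A planner could treat any cell above the threshold as occupied and use the returned distance as a simple stopping criterion.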

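The distances from different positions on the obstacle to the camera show that the distance accuracy of the method reaches 0.8 mm. This helps address the current inability of robots to navigate autonomously in dark environments and provides a basis for exploring robot navigation in dark environments without GPS support.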


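A grating-projection stereo vision sensor is proposed for robot navigation. The sensor avoids both the stereo matching problem of binocular stereo vision and the phase unwrapping problem of grating projection: it computes 3-D coordinates in the scene using only the intersection of three planes. This makes it well suited to fast, real-time obstacle detection, localization, and visual odometry, and it can be applied to robot navigation in dark field environments without GPS signals, offering a new method with high robustness, high accuracy, and good real-time performance. Experimental results show that the image computation accuracy is at the sub-pixel level and the distance accuracy reaches 0.8 mm. The project opens up research on and applications of robot visual navigation in dark environments, with broad application prospects in industrial production, exploration of unknown environments, unmanned aircraft and vehicles, automated operations in hazardous sites, and, in military and national defense, disaster relief, patrol robot sentries, mine-clearing robots, and deep-space exploration robots.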
